Tracing & Debugging your Langchain Apps using Langtrace

Yemi Adejumobi

⸱

Platform Engineer

May 28, 2024

Since we introduced support for LlamaIndex, our community has eagerly awaited another major update. We're thrilled to announce that we've added support for Langchain, the popular framework for building and iterating on AI/LLM applications. With over 85,000 GitHub stars, Langchain has become a staple in the LLM community. Our integration with Langchain is a testament to our commitment to providing developers with the tools they need to succeed.

What is Langtrace?

Langtrace is an open-source observability and evaluation tool for AI applications. It supports OpenTelemetry, so you can self-host it and review traces in the observability tools you already use (Grafana, Datadog, Elastic, etc.), as long as they accept OpenTelemetry data. While Langchain provides Langsmith as an observability option, we’ve found that most developers prefer an open-source stack that integrates with their existing toolset.

Getting Started with Langtrace and Langchain

At Langtrace, we prioritize simplicity and ease of use. Our platform was designed with developers in mind, providing the necessary tools to support you throughout your development journey. To get started with tracing and debugging your Langchain applications using Langtrace, follow these simple steps:

Install the SDK:

pip install langtrace-python-sdk

Import & Initialize Langtrace:

from langtrace_python_sdk import langtrace  # Must precede any LLM module imports

langtrace.init(api_key='<LANGTRACE_API_KEY>')

Begin tracing your application and gain valuable insights into its performance and behavior.
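
The placeholder key in the initialization step is usually better kept out of source control. As a minimal sketch, assuming the key is stored in an environment variable named `LANGTRACE_API_KEY` (the helper names `read_langtrace_api_key` and `init_tracing` are hypothetical and not part of the SDK), the setup could be wrapped like this:

```python
import os


def read_langtrace_api_key(env_var: str = "LANGTRACE_API_KEY") -> str:
    """Return the API key from the environment, failing loudly if it is unset."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set {env_var} before starting the application")
    return key


def init_tracing() -> None:
    """Initialize Langtrace; call this before importing any LLM modules."""
    # Imported lazily so this module still loads where the SDK is not installed.
    from langtrace_python_sdk import langtrace

    langtrace.init(api_key=read_langtrace_api_key())
```

In your application's entry point, call `init_tracing()` first and import your Langchain modules only afterwards, so the ordering requirement from the import step is preserved.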

Example Application: RAG Q&A Demo

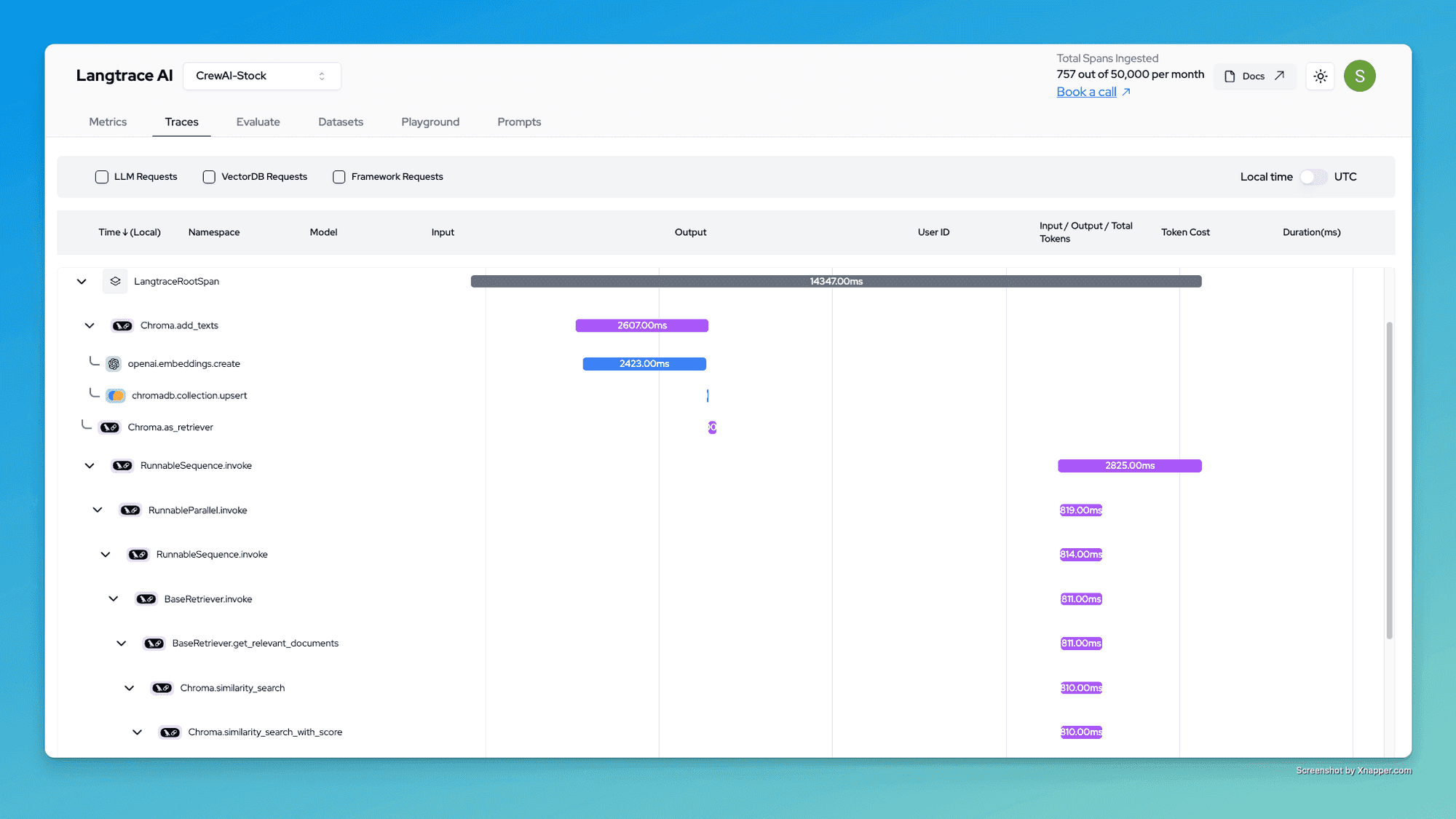

To demonstrate the power of Langtrace with Langchain, we've instrumented a sample RAG Q&A application built with Langchain. The sample app is from the popular RAG from Scratch series by Langchain, and it shows how Langtrace can help you debug and optimize your Langchain applications. In the video below, note that you can see detailed spans for the requests made by Langchain, along with the latency and framework version for each call.

Check out our demos to try the same example yourself.
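
To make the idea of a span concrete, here is a small standard-library illustration of what a trace records for each step: a name, a latency, and attributes such as the framework version. This is not how Langtrace is implemented; the `Span` class, the `traced` helper, the step names, and the version string are all made up for the example.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    """One timed step of a request, with free-form attributes."""
    name: str
    attributes: dict = field(default_factory=dict)
    latency_ms: float = 0.0


@contextmanager
def traced(name, collected, **attributes):
    """Time the enclosed block and append the finished span to `collected`."""
    span = Span(name=name, attributes=dict(attributes))
    start = time.perf_counter()
    try:
        yield span
    finally:
        span.latency_ms = (time.perf_counter() - start) * 1000.0
        collected.append(span)


spans = []

# Two stand-in steps of a RAG pipeline; the sleeps simulate real work.
with traced("retrieve_documents", spans, framework="langchain", framework_version="0.0.0-example"):
    time.sleep(0.01)

with traced("generate_answer", spans, framework="langchain", framework_version="0.0.0-example"):
    time.sleep(0.02)

for span in spans:
    print(f"{span.name}: {span.latency_ms:.1f} ms {span.attributes}")
```

Reading a real trace works the same way: you scan the spans in order and look for the step whose latency dominates the request.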

Benefits of Using Langtrace with Langchain

By integrating Langtrace with Langchain, you'll enjoy the following benefits:

Real-time observability: Gain instant insights into your application's performance and behavior. Identify and resolve issues quickly, reducing development time and costs.

Open-source & OpenTelemetry: No vendor lock-in. Integrate Langtrace with your existing observability tools.

Ease of onboarding & privacy: It is easy to get started. For teams with strict privacy and security requirements, you can also self-host your own instance of Langtrace. Check out our guide to get started.

Conclusion

We're excited to bring the power of Langtrace to the Langchain community. Our integration empowers developers to build faster, more efficient, and more accurate AI/LLM applications. As an open-source project, we're committed to continuing to improve and expand our features and capabilities. Try Langtrace with Langchain today and give us feedback.

Ready to get started? Install Langtrace and begin tracing your Langchain applications today! If you have any questions or need assistance, please don't hesitate to reach out in-platform or join our Discord.

Happy Langtracing!
