New Product Launch!

Langtrace Lite: a lightweight, fully in-browser, OTEL-compatible observability dashboard

Transform AI Prototypes into Enterprise-Grade Products

Langtrace is an Open Source Observability and Evaluations Platform for AI Agents

Hi! What’s the best way to improve the performance and security of my AI agents?

Hi, thanks for reaching out. You need a combination of observability and evaluations to measure performance and iterate toward better performance and safety in your AI agents. Langtrace is the best platform out there to help you do this with minimal effort.

How do I set up Langtrace?

You can set up Langtrace by following the steps below:
- Create a project and generate an API key.
- Follow the instructions to install the appropriate SDK and instantiate Langtrace with the API key.
Let me know if you have any additional questions.

Thanks! What frameworks do you support?

Langtrace supports CrewAI, DSPy, LlamaIndex, and LangChain. We also support a wide range of LLM providers and vector databases out of the box.

Chat with joe@acme.co

0s

2

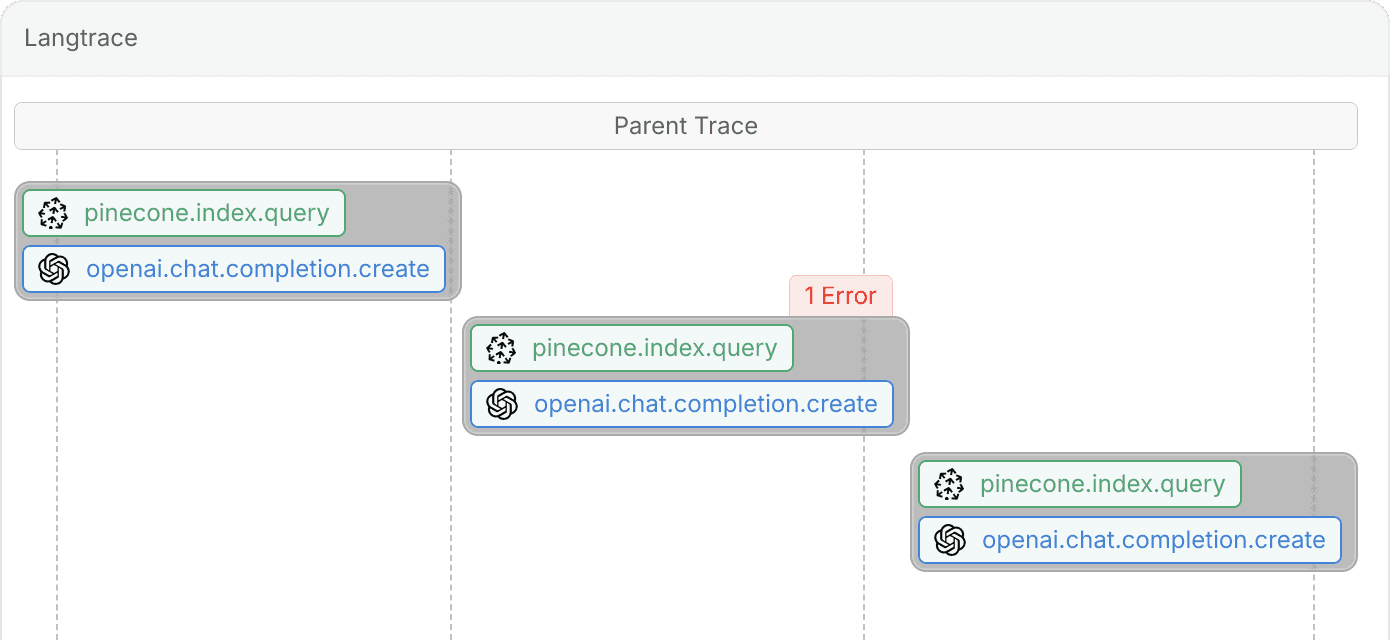

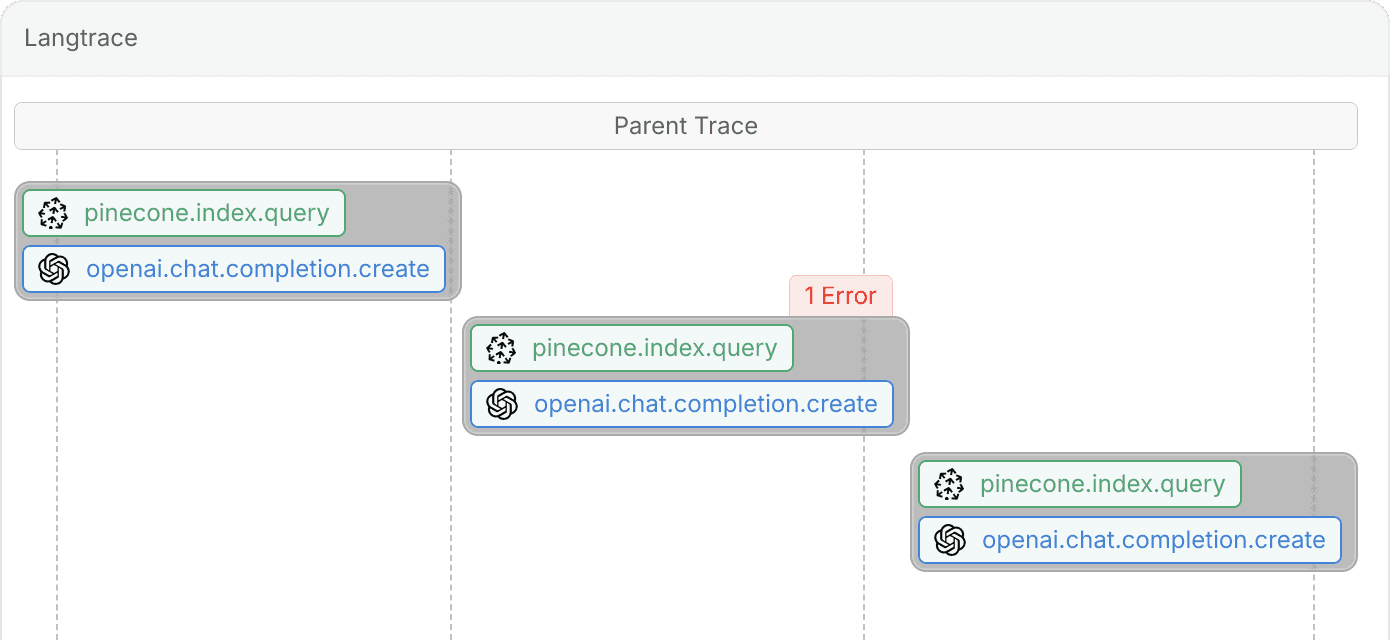

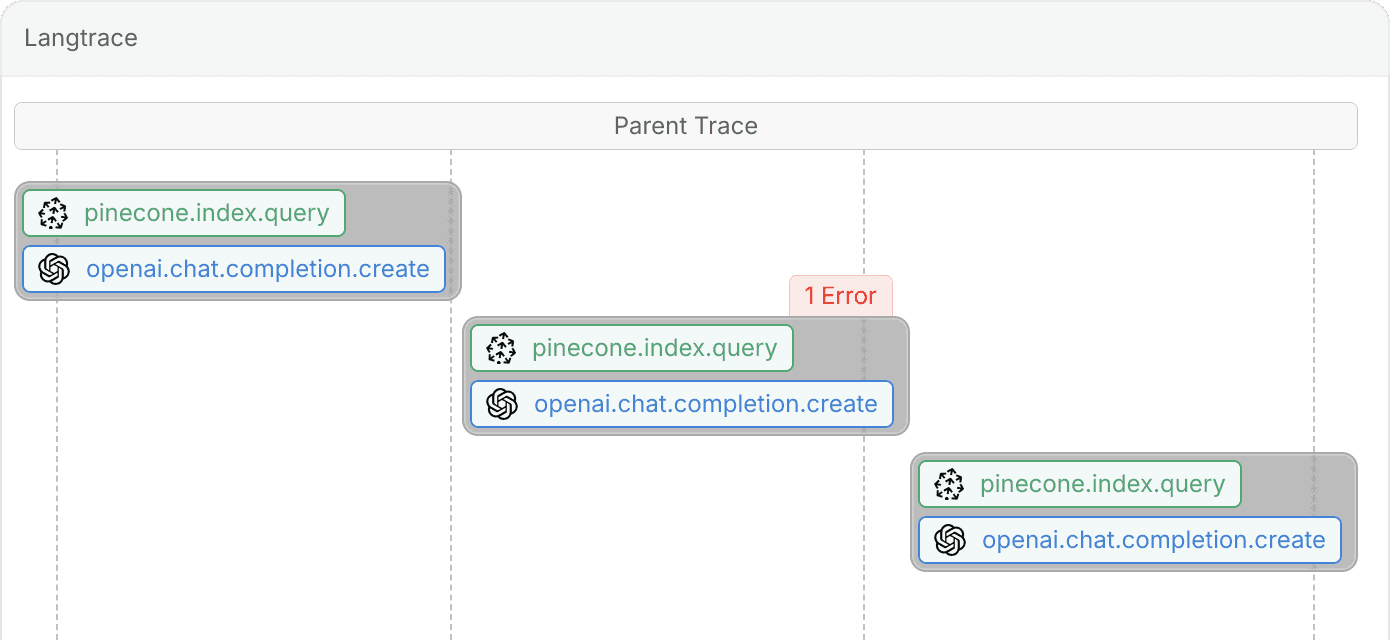

Parent Trace

1200ms

1800ms

Langtrace

pinecone.index.query

openai.chat.completion.create

pinecone.index.query

openai.chat.completion.create

1 Error

pinecone.index.query

openai.chat.completion.create

Simple, non-intrusive setup

Set up the Langtrace SDK with 2 lines of code. SDKs are available for Python and TypeScript.

Python

from langtrace_python_sdk import langtrace

langtrace.init(api_key="<your_api_key>")

Everything you need, out of the box.

Get the insights and evaluations needed to take your LLM app to the next level

[Dashboard preview: Accuracy 303 (+22%); Token Cost $6,200 (+22%) against a $10,000 budget; Inference Latency 75ms (-16%), max 120ms]

Track Vital Metrics

Dashboards to track token usage, cost, latency, and evaluated accuracy.
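The figures on a dashboard like this are aggregates over individual LLM calls. As a rough sketch, assuming each call has already been reduced to a record with a latency and a cost, the roll-up could look like the following. `RequestRecord`, `summarize`, the sample values, the budget, and the latency ceiling are hypothetical illustrations, not part of the Langtrace SDK.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class RequestRecord:
    """One traced LLM call (hypothetical shape, for illustration only)."""
    model: str
    latency_ms: float
    cost_usd: float


def summarize(records: List[RequestRecord], budget_usd: float,
              max_latency_ms: float) -> Dict[str, float]:
    """Roll per-request records up into dashboard-style metrics."""
    if not records:
        raise ValueError("no records to summarize")
    total_cost = sum(r.cost_usd for r in records)
    latencies = [r.latency_ms for r in records]
    return {
        "total_cost_usd": total_cost,
        "budget_remaining_usd": budget_usd - total_cost,
        "avg_latency_ms": mean(latencies),
        "peak_latency_ms": max(latencies),
        # Count of requests slower than the latency ceiling.
        "slow_requests": sum(1 for ms in latencies if ms > max_latency_ms),
    }


# Hypothetical sample data; real values would come from collected traces.
sample = [
    RequestRecord("gpt-4o", 70.0, 2.50),
    RequestRecord("gpt-4o", 85.0, 3.75),
    RequestRecord("gpt-4o", 130.0, 1.25),
]
print(summarize(sample, budget_usd=10_000, max_latency_ms=120))
```

A production dashboard would usually also report latency percentiles (such as p95) rather than only the average and the peak.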

[Trace attributes preview: gen_ai.system, gen_ai.request.model, gen_ai.usage.prompt_tokens, gen_ai.usage.completion_tokens, gen_ai.usage.total_tokens, db.query.usage.read_units, langchain.outputs]

Explore API Requests

Automatically trace your GenAI stack and surface relevant metadata.
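To illustrate how surfaced metadata can be put to work, here is a minimal sketch that totals token usage per model. It assumes each span's attributes are available as a plain dictionary keyed by attribute names such as `gen_ai.request.model` and `gen_ai.usage.prompt_tokens`; the sample spans and the `tokens_by_model` helper are hypothetical and not part of any Langtrace API.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical spans: each dict holds the attributes of one traced call.
spans = [
    {"gen_ai.system": "openai", "gen_ai.request.model": "gpt-4o",
     "gen_ai.usage.prompt_tokens": 120, "gen_ai.usage.completion_tokens": 40},
    {"gen_ai.system": "openai", "gen_ai.request.model": "gpt-4o",
     "gen_ai.usage.prompt_tokens": 200, "gen_ai.usage.completion_tokens": 60},
    {"gen_ai.system": "openai", "gen_ai.request.model": "gpt-4o-mini",
     "gen_ai.usage.prompt_tokens": 50, "gen_ai.usage.completion_tokens": 10},
    # A vector-DB span carries no model or token usage, so it is skipped.
    {"db.query.usage.read_units": 5},
]


def tokens_by_model(spans: List[dict]) -> Dict[str, Dict[str, int]]:
    """Sum prompt, completion, and total tokens per gen_ai.request.model."""
    totals: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"prompt": 0, "completion": 0, "total": 0}
    )
    for attrs in spans:
        model = attrs.get("gen_ai.request.model")
        if model is None:
            continue  # not an LLM span
        prompt = attrs.get("gen_ai.usage.prompt_tokens", 0)
        completion = attrs.get("gen_ai.usage.completion_tokens", 0)
        bucket = totals[model]
        bucket["prompt"] += prompt
        bucket["completion"] += completion
        # Fall back to prompt + completion when total_tokens is absent.
        bucket["total"] += attrs.get("gen_ai.usage.total_tokens", prompt + completion)
    return dict(totals)


print(tokens_by_model(spans))
```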

Evaluations

Measure baseline performance and curate datasets for automated evaluations and fine-tuning.
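At its simplest, a baseline evaluation runs a model over a curated dataset and scores each output. The sketch below uses exact-match accuracy, which is only one of many possible metrics. The dataset, the `baseline` stub standing in for a real LLM call, and the `accuracy` helper are hypothetical and do not represent Langtrace's evaluation API.

```python
from typing import Callable, List, Tuple

# Hypothetical curated dataset: (input, expected output) pairs.
Dataset = List[Tuple[str, str]]


def accuracy(model: Callable[[str], str], dataset: Dataset) -> float:
    """Fraction of examples whose output exactly matches the reference label."""
    if not dataset:
        raise ValueError("dataset is empty")
    correct = sum(
        1 for prompt, expected in dataset
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(dataset)


def baseline(prompt: str) -> str:
    """Stand-in for a real LLM call (hypothetical keyword heuristic)."""
    return "positive" if "great" in prompt.lower() else "negative"


dataset: Dataset = [
    ("This product is great", "positive"),
    ("Great support, fast setup", "positive"),
    ("It broke after a day", "negative"),
    ("Not what I expected", "neutral"),
]
print(accuracy(baseline, dataset))  # → 0.75
```

Re-running the same scoring function after each prompt or model change gives a consistent number to compare against the baseline.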

[Prompt versions preview: Prompt A and Prompt B, v1.74, GPT-4o]

Prompt Version Control

Store and version control your prompts. Deploy new prompts or roll back with just a few clicks.
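Conceptually, prompt version control keeps an append-only history of prompt texts plus a record of which version is live, so rolling back means pointing production at the previously deployed version. The in-memory `PromptRegistry` below is a hypothetical sketch of that idea, not Langtrace's implementation or API; the prompt texts are made up.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store supporting deploy and rollback."""
    versions: List[str] = field(default_factory=list)     # append-only prompt history
    deployments: List[int] = field(default_factory=list)  # deployed version numbers, oldest first

    def save(self, text: str) -> int:
        """Store a new prompt version; returns its 1-based version number."""
        self.versions.append(text)
        return len(self.versions)

    def deploy(self, version: int) -> None:
        """Make an existing version live."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.deployments.append(version)

    def rollback(self) -> int:
        """Revert to the previously deployed version and return its number."""
        if len(self.deployments) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        self.deployments.pop()
        return self.deployments[-1]

    def live(self) -> str:
        """Text of the prompt currently in production."""
        if not self.deployments:
            raise RuntimeError("nothing deployed yet")
        return self.versions[self.deployments[-1] - 1]


registry = PromptRegistry()
a = registry.save("You are a document parser. Return JSON.")  # hypothetical Prompt A
b = registry.save("You are a document parser. Return YAML.")  # hypothetical Prompt B
registry.deploy(a)
registry.deploy(b)
registry.rollback()
print(registry.live())  # → You are a document parser. Return JSON.
```

Tracking the deployment history, rather than just decrementing a version number, means a rollback returns to whatever was live before, even when versions were deployed out of order.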

[Playground preview, prompt: "You are a document parser. Your job is to read the document and parse it in …"]

Playground

Compare the performance of your prompts across different models.

Enterprise-grade Security

Designed to help enterprises of any size deploy AI apps safely.

Private & Secure

Proven, industry-leading security protocols protect your data with enterprise-grade encryption standards.

Compliant

SOC 2 Type II certified, meeting stringent compliance requirements for robust data protection.

Open Source

Our solutions empower you to customize, audit, and contribute, fostering innovation and trust in every line of code.

Proudly Open Source

Our source code is fully accessible on GitHub—explore, review, and contribute as you see fit, and help us shape the future together!

Langtrace is not just a GenAI adoption story, but also a story of how a humble, persistent open source community can coexist in a highly competitive, emerging space.

Adrian Cole

Principal Engineer, Elastic

It was a very easy, quick integration. Kudos to you guys for that. It doesn't take a lot to reflect. That was a fun thing.

Aman Purwar

Founding Engineer, Fulcrum AI

I have been using Langtrace for a couple of months now and it is the real deal. They also have a real plan for helping businesses with privacy by ensuring on-prem installs. It's definitely worth trying out.

Steven Moon

Founder, Aech AI

We looked around for an observability platform for our DSPy-based application, but we could not find anything that was easy to set up and intuitive, until I stumbled upon Langtrace. It has already helped us solve a few bugs.

Denis Ergashbaev

CTO, Salomatic

Ready to deploy?

Try out the Langtrace SDK with just 2 lines of code.

Want to learn more?

Check out our documentation to learn more about how Langtrace works

Join the Community

Check out our Discord community to ask questions and meet customers

Langtrace – Improve your LLM apps
