Monitoring a LangChain, Elasticsearch, and Azure OpenAI RAG Application with Langtrace and Elastic APM

Karthik Kalyanaraman, Cofounder and CTO

Dec 10, 2024

Introduction

This guide walks through setting up a Retrieval-Augmented Generation (RAG) chatbot application. The chatbot uses Elasticsearch for document search, Azure OpenAI for generating responses, and Langtrace to send traces to Elastic APM for observability. You can run the chatbot using the code in this GitHub repository.

Step 1: Set Environment Variables for OpenTelemetry Traces

Set up the following environment variables to enable OpenTelemetry tracing and send traces to Elastic APM:

Replace the value of OTEL_EXPORTER_OTLP_ENDPOINT and the <secret> placeholder with the appropriate values for your Elastic APM setup.
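As a quick sanity check before starting the app, the sketch below verifies that the tracing variables look usable. It assumes the secret is passed through the standard OpenTelemetry variable OTEL_EXPORTER_OTLP_HEADERS as an Authorization header, which is a common arrangement but may differ in your Elastic APM setup. The helper names `parse_otlp_headers` and `check_otlp_env` are hypothetical and not part of any SDK.

```python
import os
from urllib.parse import unquote


def parse_otlp_headers(raw: str) -> dict:
    """Parse the comma-separated key=value list used by OTEL_EXPORTER_OTLP_HEADERS."""
    headers = {}
    for pair in raw.split(","):
        if not pair.strip():
            continue  # ignore empty segments such as a trailing comma
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"malformed header entry: {pair!r}")
        # Values may be URL-encoded (for example a space written as %20).
        headers[key.strip()] = unquote(value.strip())
    return headers


def check_otlp_env(env=os.environ) -> list:
    """Return a list of problems; an empty list means the settings look usable."""
    problems = []
    endpoint = env.get("OTEL_EXPORTER_OTLP_ENDPOINT", "")
    if not endpoint.startswith(("http://", "https://")):
        problems.append("OTEL_EXPORTER_OTLP_ENDPOINT should be an http(s) URL")
    # Assumption: the APM secret travels in an Authorization header.
    auth = parse_otlp_headers(env.get("OTEL_EXPORTER_OTLP_HEADERS", "")).get("Authorization", "")
    if not auth or "<secret>" in auth:
        problems.append("OTEL_EXPORTER_OTLP_HEADERS needs a real Authorization value")
    return problems
```

Calling `check_otlp_env()` with no arguments inspects the current process environment, so it can be run once at startup and its result logged.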

Step 2: Install and Set Up Langtrace

Install the Langtrace Python SDK in your application environment:

Next, integrate Langtrace into your Flask app. Open your app's app.py file and add the following code at the top:

This enables automatic tracing and observability for your application.
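A minimal sketch of the initialization described in this step, assuming the SDK was installed from PyPI as `langtrace-python-sdk`. The wrapper function `init_tracing` is a hypothetical convenience, not part of the SDK; it guards the import so the app still starts when the package is missing. The reason for placing the call at the top of app.py, as far as we understand it, is that Langtrace patches supported libraries when `init()` runs, so it should execute before the modules that use them are imported.

```python
def init_tracing() -> bool:
    """Initialize Langtrace if the SDK is installed; return True when tracing is on."""
    try:
        # Entry point of the Langtrace Python SDK.
        from langtrace_python_sdk import langtrace
    except ImportError:
        # SDK not installed: run the app without tracing rather than crashing.
        return False
    # With no arguments, configuration is taken from the environment.
    langtrace.init()
    return True


# Call this before importing the modules that use LangChain, Elasticsearch,
# or OpenAI, so that those libraries can be instrumented.
TRACING_ENABLED = init_tracing()
```

If you would rather fail fast when the SDK is missing, drop the try/except and use the two SDK lines directly.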

Step 3: Configure Azure OpenAI Environment Variables

Ensure the necessary Azure OpenAI environment variables are set up:

Replace placeholders with the respective values from your Azure OpenAI setup.
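The exact variable names depend on how the application reads its configuration. As an illustration only, the sketch below checks for the names that the `openai` Python SDK's Azure client reads by default (AZURE_OPENAI_ENDPOINT, AZURE_OPENAI_API_KEY, OPENAI_API_VERSION). These are an assumption here, and the repository's app may expect different names, so adjust the tuple to match it. `missing_vars` is a hypothetical helper.

```python
import os

# Assumed names: defaults read by the openai SDK's Azure client.
# Replace with whatever names the application actually expects.
REQUIRED_AZURE_VARS = (
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "OPENAI_API_VERSION",
)


def missing_vars(names, env=os.environ) -> list:
    """Return the names that are unset or blank in the given environment."""
    return [name for name in names if not env.get(name, "").strip()]
```

Running `missing_vars(REQUIRED_AZURE_VARS)` at startup and stopping when the result is non-empty gives a clearer error than a failed request later on.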

Step 4: Run the Flask Application

Start the Flask application by running the following command:

This will start your RAG application, making it available for interaction.

Step 5: Use the Chatbot

Interact with the chatbot by asking questions. The chatbot uses Elasticsearch to retrieve relevant documents and Azure OpenAI to generate responses.
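To make the retrieve-then-generate flow concrete, here is a toy sketch that uses only the standard library. It is not the application's implementation: word overlap over an in-memory list stands in for the Elasticsearch query, and the `generate` callable stands in for the Azure OpenAI call. All function names are hypothetical.

```python
from typing import Callable, List


def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by shared words with the question (stand-in for a search query)."""
    terms = set(question.lower().split())
    scored = [(len(terms & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Keep at most k documents, and only those with some overlap.
    return [doc for score, doc in ranked[:k] if score > 0]


def build_prompt(question: str, context: List[str]) -> str:
    """Combine the retrieved passages and the question into a single prompt."""
    passages = "\n".join(f"- {doc}" for doc in context)
    return f"Answer using only this context:\n{passages}\n\nQuestion: {question}"


def answer(question: str, documents: List[str], generate: Callable[[str], str]) -> str:
    """Retrieve context first, then hand the assembled prompt to the model."""
    return generate(build_prompt(question, retrieve(question, documents)))
```

In the real application each of these stages is a network call, which is why each one shows up as its own span in the traces.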

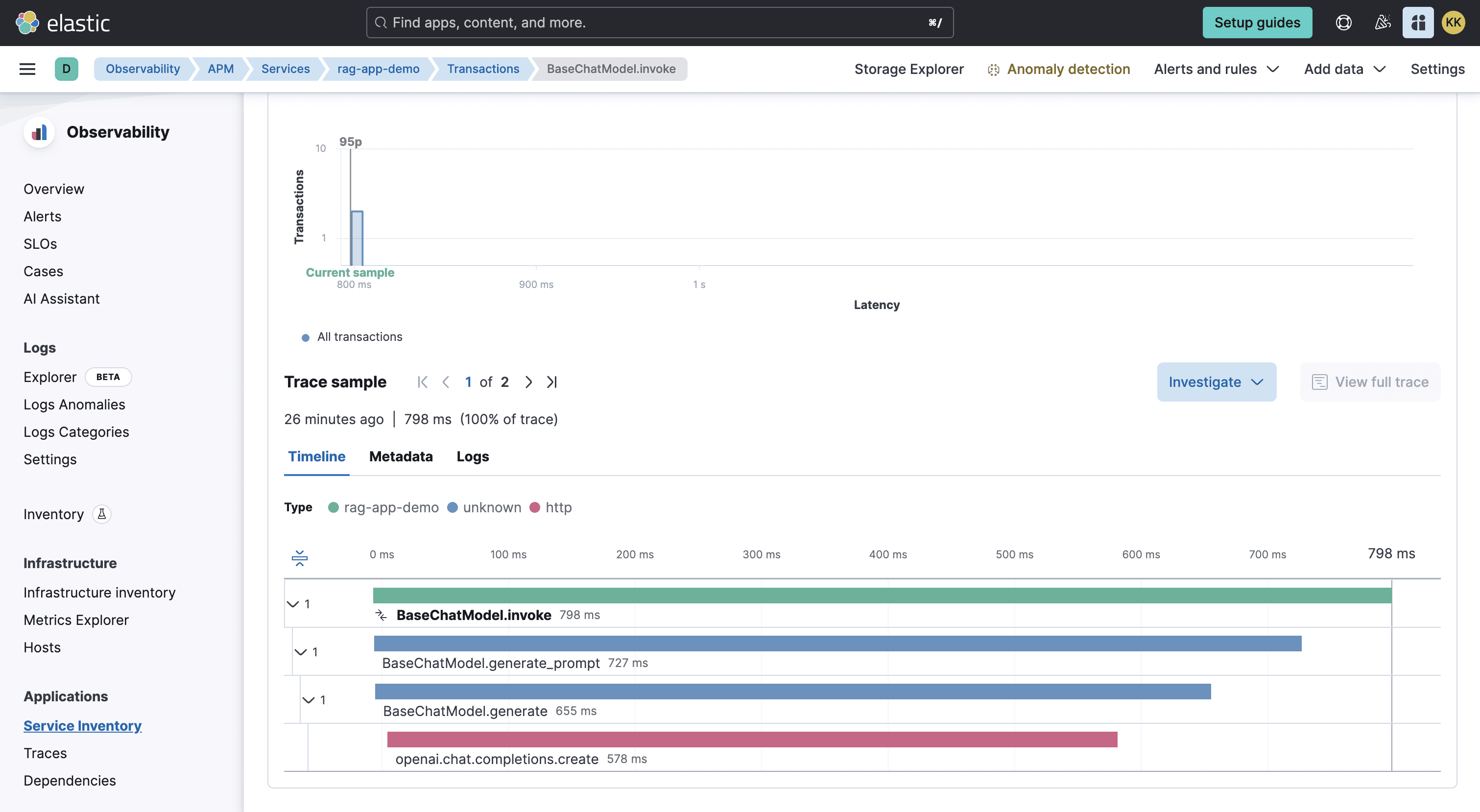

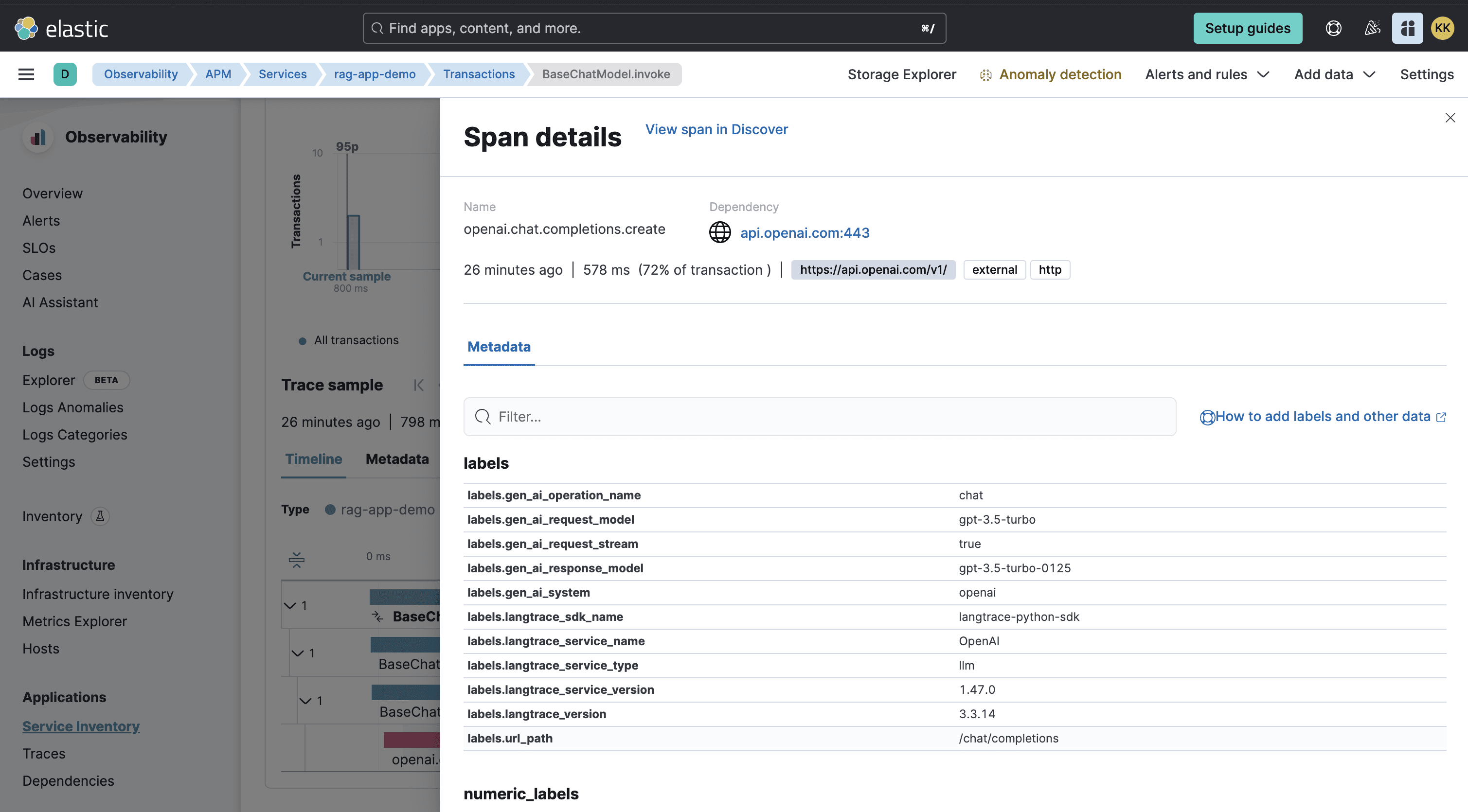

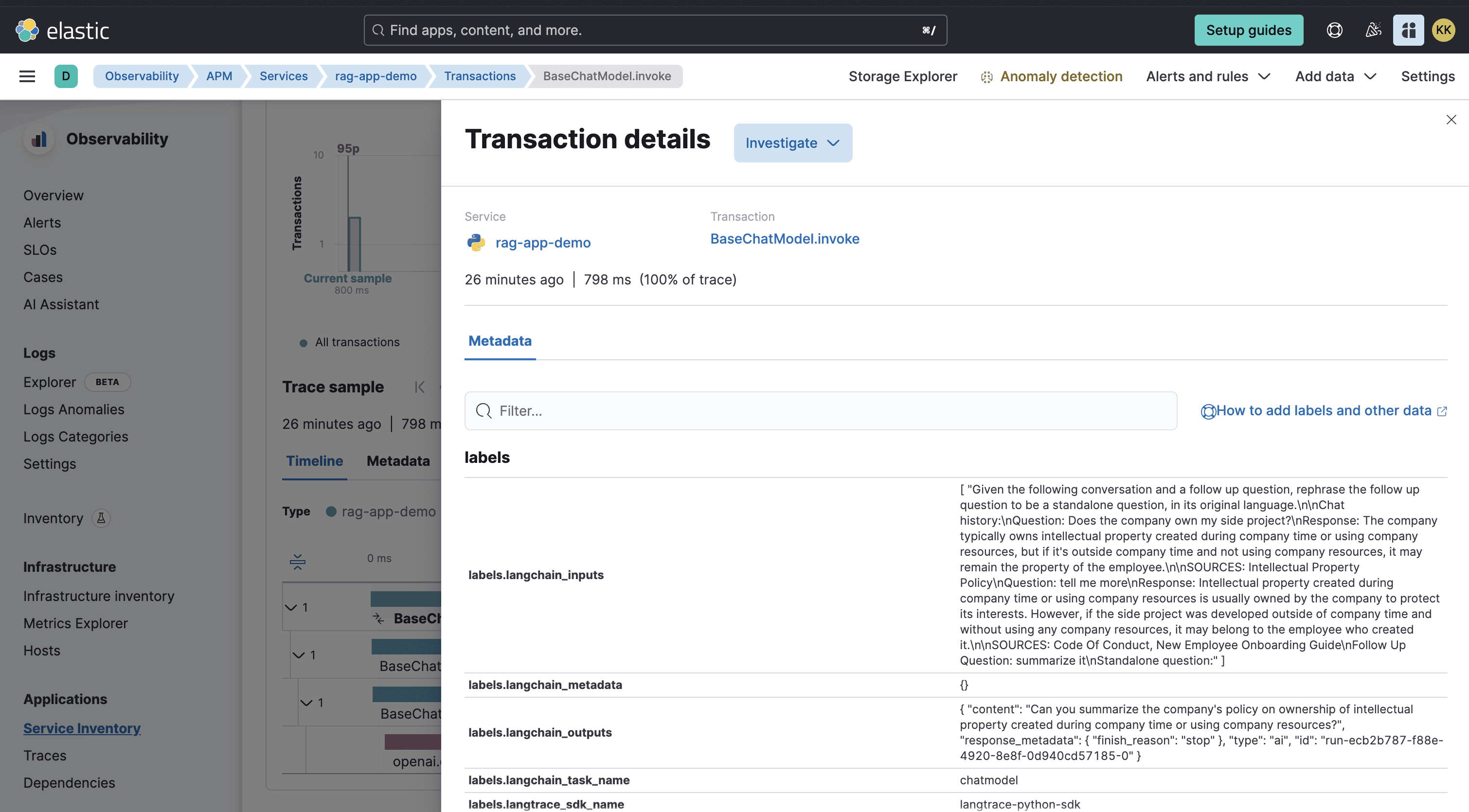

Step 6: Observe Traces in Elastic APM

After using the chatbot, navigate to your Elastic APM dashboard. You’ll see detailed traces of your application, including interactions with Elasticsearch, Azure OpenAI, and the RAG logic. Use these traces for debugging, performance monitoring, and optimization.

Elastic APM traces dashboard

Conclusion

By following these steps, you can set up a fully functional RAG chatbot application and observe its behavior through traces in Elastic APM.

Useful Resources

Getting started with Langtrace https://docs.langtrace.ai/introduction

Langtrace Website https://langtrace.ai/

Langtrace Discord https://discord.langtrace.ai/

Langtrace GitHub https://github.com/Scale3-Labs/langtrace

Langtrace Twitter (X) https://x.com/langtrace_ai

Langtrace LinkedIn https://www.linkedin.com/company/langtrace/about/
