Introducing Langtrace: Open-source LLM Observability Tool

Jay Thakrar

Head of Product and Strategy

Apr 2, 2024

Although LLMs are a market-transforming technology that made headlines nearly every week throughout 2023 and into 2024, most enterprises still lack the confidence to adopt them in production-grade applications, whether for internal or external use cases. This hesitation stems from several concerns, including customization, control, accuracy, usage, and cost.

What is Langtrace?

To bolster enterprises' confidence in LLM-powered applications, we're excited to introduce Langtrace: fully open-source software that lets you trace requests, run evaluations, manage prompts and datasets, and debug issues related to an LLM's performance in your application. Langtrace aims to bridge the gap between the intricate inner workings of LLM-powered applications and the developers and researchers who build and use them.

Why Does Open-Source LLM Application Observability Matter?

Open-source tooling is crucial for monitoring large language model (LLM) applications because it ensures transparency, enhances flexibility and security, and enables community-driven innovation.

Transparency: because the source code is public, users can inspect how monitoring works, which lets them understand and trust the process.

Flexibility: developers can customize the tooling to fit their application's needs, which positions them to keep up with the field's rapid evolution. For large enterprises, for example, data privacy and security are top of mind. Because Langtrace is fully open source, enterprises can self-host it and get end-to-end observability for their LLM-powered applications, protecting their data privacy while ensuring effective use of the underlying LLMs.

Community contributions drive faster innovation, pooling diverse expertise to tackle challenges and improve monitoring effectiveness.

This collective approach not only advances the technology but also promotes a culture of responsibility and ethical standards in AI development.

Key Benefits and Features

As of today, Langtrace offers a handful of key features and benefits, including:

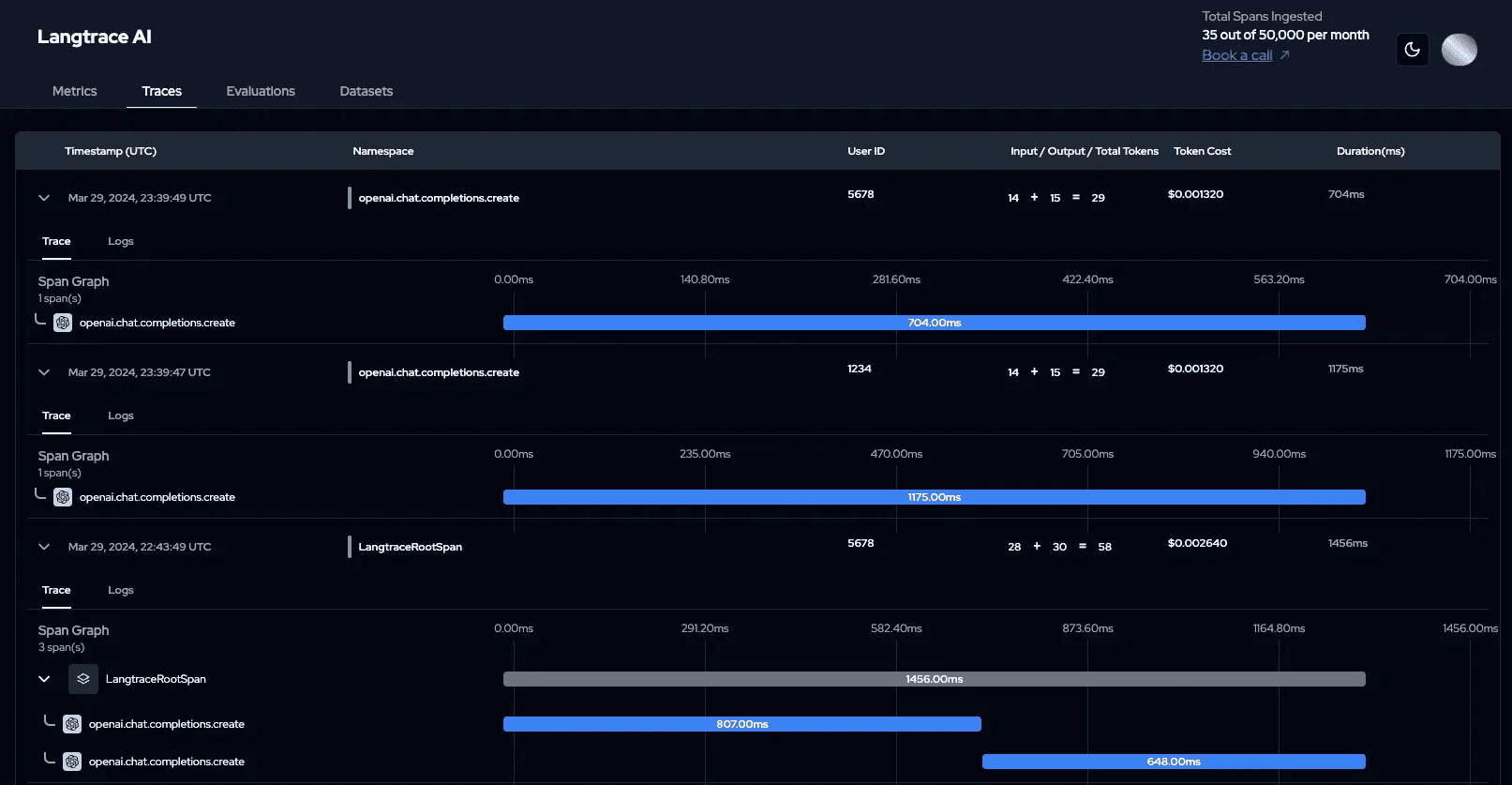

Detailed tracing and logs: Langtrace captures OpenTelemetry-standard traces across widely used frameworks, LLMs, and vector databases, so you can follow the pathways and transformations inside your LLM application and see how inputs are processed into outputs.
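To make the idea of a trace concrete, here is a minimal, stdlib-only Python sketch of the span model that OpenTelemetry traces follow: each step of a request (for example a vector-database lookup or an LLM call) is recorded as a span with start and end times, free-form attributes, and a pointer to its parent span, and every span in one request shares a trace ID. The `Tracer` class, its `span()` method, and the attribute names are hypothetical illustrations; this is not Langtrace's SDK or the OpenTelemetry API.

```python
import contextvars
import secrets
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    """One unit of work in a trace (toy model, not the OpenTelemetry class)."""
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    attributes: dict = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0

    @property
    def duration_ms(self) -> float:
        return (self.end - self.start) * 1000


# Holds the currently open span so that nested spans can find their parent.
_current_span = contextvars.ContextVar("current_span", default=None)


class Tracer:
    """Hypothetical toy tracer that keeps finished spans in memory."""

    def __init__(self) -> None:
        self.finished = []

    @contextmanager
    def span(self, name, **attributes):
        parent = _current_span.get()
        span = Span(
            name=name,
            # A root span starts a new trace; children inherit the trace ID.
            trace_id=parent.trace_id if parent is not None else secrets.token_hex(16),
            span_id=secrets.token_hex(8),
            parent_id=parent.span_id if parent is not None else None,
            attributes=dict(attributes),
            start=time.perf_counter(),
        )
        token = _current_span.set(span)
        try:
            yield span
        finally:
            span.end = time.perf_counter()
            _current_span.reset(token)
            self.finished.append(span)


# Usage: one retrieval-augmented request with a vector search and an LLM call.
tracer = Tracer()
with tracer.span("rag_request", query="What is Langtrace?") as root:
    with tracer.span("vector_search", db="chromadb", top_k=3):
        docs = ["doc-1", "doc-2", "doc-3"]  # stand-in for a real retrieval
    with tracer.span("llm_call", model="example-model") as llm:
        llm.attributes["completion_tokens"] = 42  # stand-in for real usage data

for s in tracer.finished:
    print(s.name, "parent:", s.parent_id, f"{s.duration_ms:.3f} ms")
```

Nesting the `with` blocks is what produces the parent/child structure: the `ContextVar` tracks the currently open span, so any span opened inside it becomes its child. An observability backend can then render the spans of each trace as a tree or waterfall.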

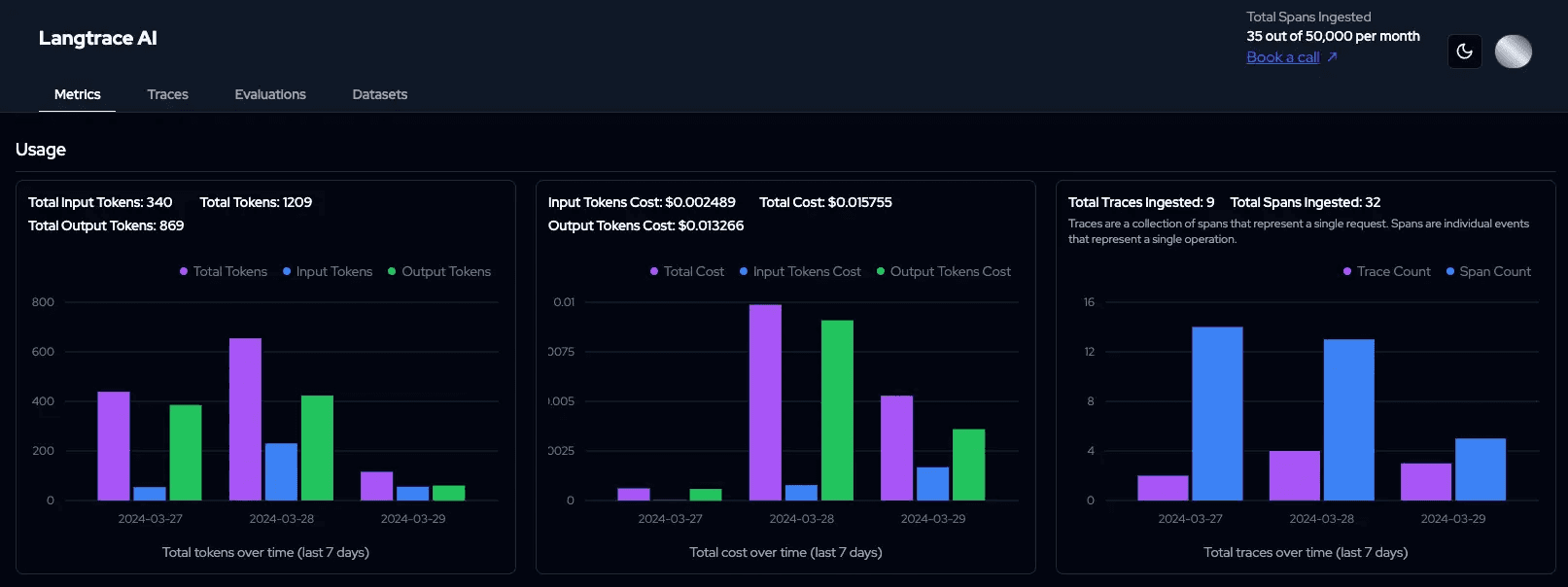

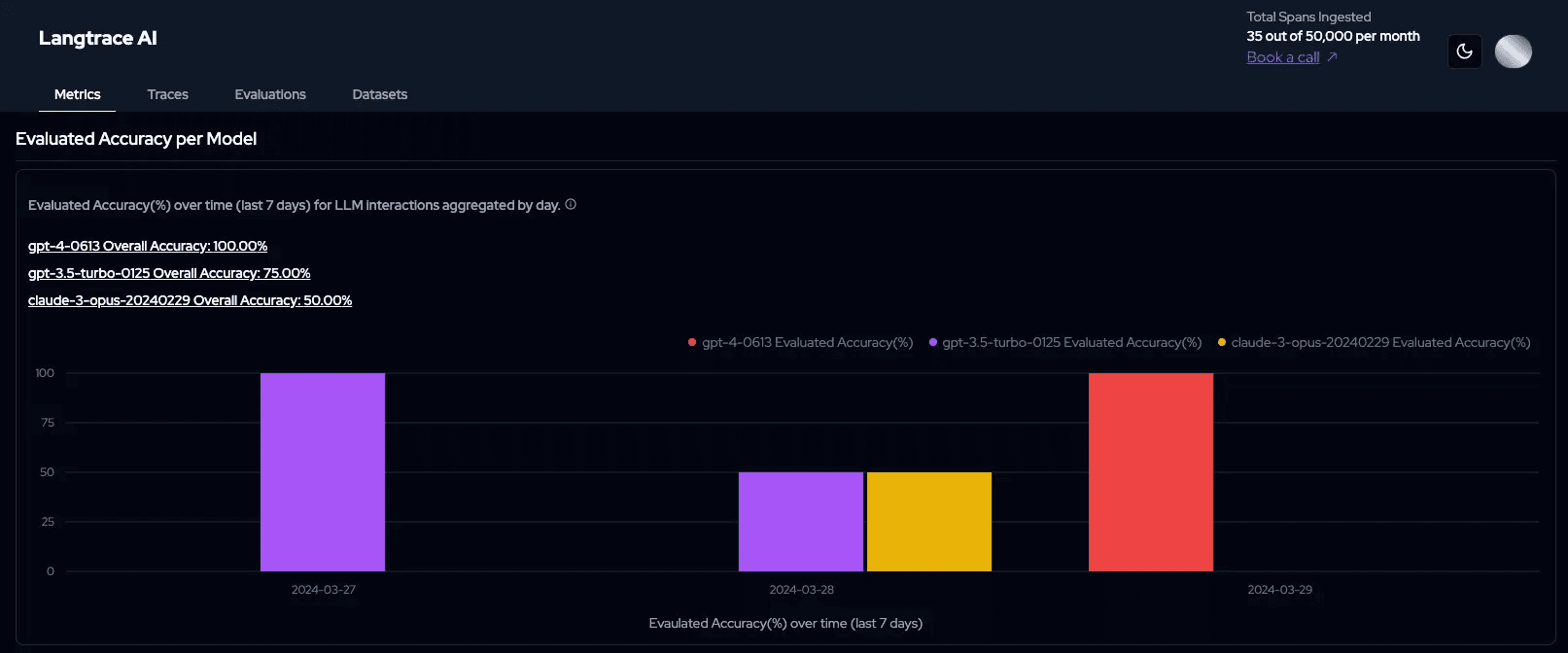

Real-time Monitoring of Key Metrics: Langtrace tracks key metrics in real time, including (1) cost and usage, (2) accuracy, and (3) response time (latency).
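Two of these metrics, cost/usage and latency, come down to simple arithmetic over per-call records. The sketch below, assuming each traced LLM call records its prompt tokens, completion tokens, and latency, totals token usage, estimates cost from per-1,000-token prices, and reports nearest-rank p50/p95 latency. The `CallRecord` fields and the prices are hypothetical placeholders, not Langtrace's data model or any provider's actual rates.

```python
import math
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider and model.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015


@dataclass
class CallRecord:
    """Per-call data a tracing tool might capture (hypothetical fields)."""
    prompt_tokens: int
    completion_tokens: int
    latency_ms: float


def call_cost(rec: CallRecord) -> float:
    """Estimated cost of one call: input and output tokens priced separately."""
    return (rec.prompt_tokens / 1000 * PRICE_PER_1K_INPUT
            + rec.completion_tokens / 1000 * PRICE_PER_1K_OUTPUT)


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of values <= it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(calls: list[CallRecord]) -> dict:
    """Aggregate usage, estimated cost, and latency percentiles over many calls."""
    latencies = [c.latency_ms for c in calls]
    return {
        "calls": len(calls),
        "total_tokens": sum(c.prompt_tokens + c.completion_tokens for c in calls),
        "total_cost_usd": sum(call_cost(c) for c in calls),
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
    }


calls = [
    CallRecord(prompt_tokens=1200, completion_tokens=300, latency_ms=850),
    CallRecord(prompt_tokens=800, completion_tokens=200, latency_ms=620),
    CallRecord(prompt_tokens=2000, completion_tokens=500, latency_ms=1900),
    CallRecord(prompt_tokens=500, completion_tokens=100, latency_ms=400),
]
summary = summarize(calls)
print(summary)
```

For these four sample calls the summary works out to 5,600 tokens, an estimated cost of about $0.0039 (4.5 × $0.0005 + 1.1 × $0.0015), a p50 latency of 620 ms, and a p95 latency of 1,900 ms. Nearest-rank percentiles are used because they always return an observed value; with only four calls, p95 is simply the slowest one.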

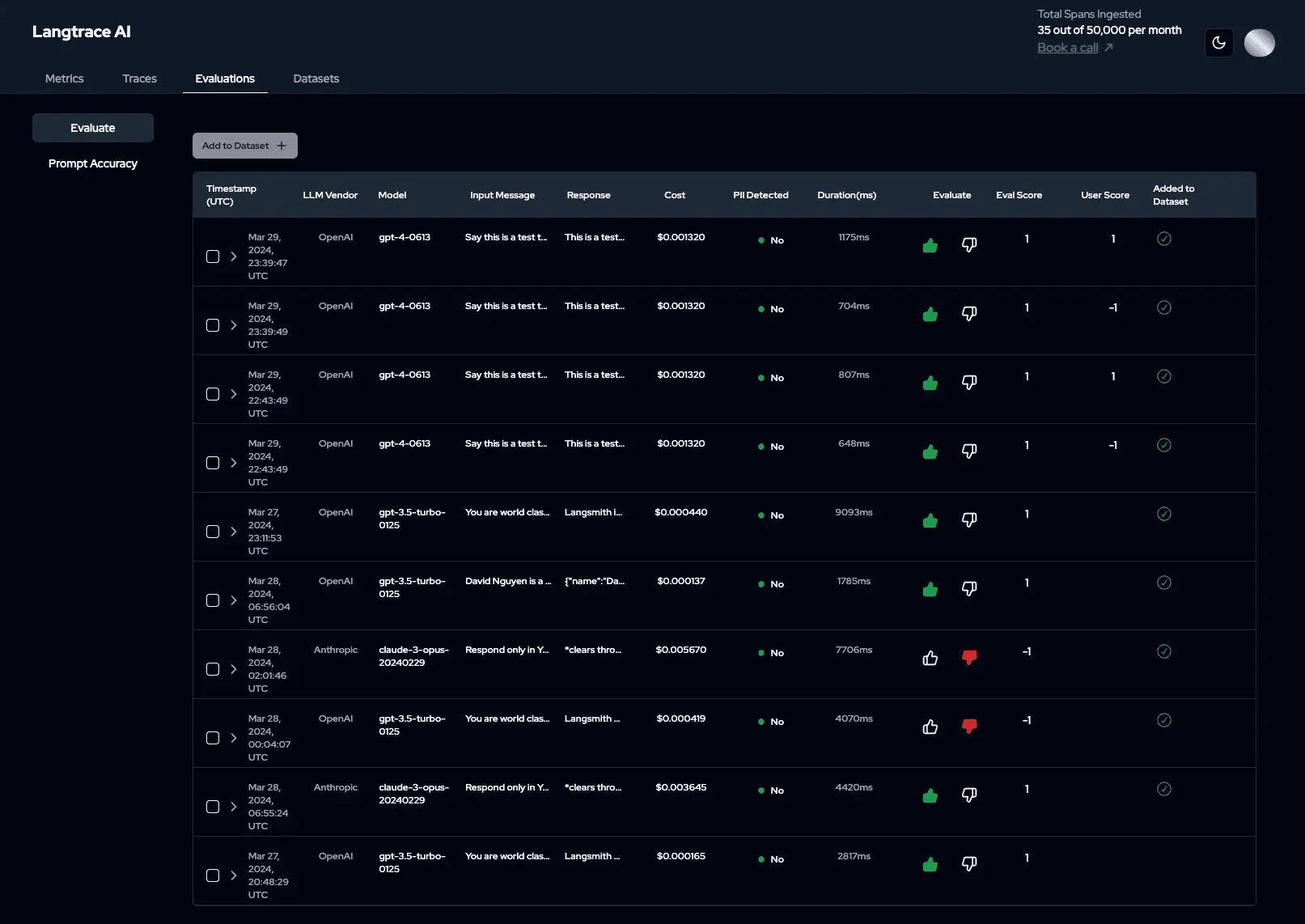

Evaluations: Developers and users can manually score the outputs of an LLM application, giving the application's developers a holistic view of its performance.
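As a rough illustration of how manual scores can roll up into a holistic view, the sketch below groups reviewer scores by the prompt version that produced each response and reports the number of ratings, the mean score, and the share of responses rated at or above a threshold. The 1-5 scale, the `Evaluation` schema, and grouping by `prompt_version` are assumptions made for this example, not Langtrace's evaluation format.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Evaluation:
    """A manual score attached to one traced LLM response (hypothetical schema)."""
    span_id: str         # ID of the traced response that was scored
    prompt_version: str  # prompt version that produced the response
    score: int           # reviewer's score on an assumed 1-5 scale


def summarize_scores(evals: list[Evaluation], good_threshold: int = 4) -> dict:
    """Group scores by prompt version; report count, mean, and share >= threshold."""
    by_version = defaultdict(list)
    for e in evals:
        if not 1 <= e.score <= 5:
            raise ValueError(f"score out of range for {e.span_id}: {e.score}")
        by_version[e.prompt_version].append(e.score)
    return {
        version: {
            "n": len(scores),
            "mean_score": mean(scores),
            "good_rate": sum(s >= good_threshold for s in scores) / len(scores),
        }
        for version, scores in by_version.items()
    }


# Hypothetical reviewer scores for responses from two prompt versions.
evals = [
    Evaluation("span-a", "v1", 4),
    Evaluation("span-b", "v1", 2),
    Evaluation("span-c", "v1", 3),
    Evaluation("span-d", "v2", 5),
    Evaluation("span-e", "v2", 4),
]
report = summarize_scores(evals)
for version, stats in report.items():
    print(version, stats)
```

Here v1 averages 3 with one of its three responses rated 4 or higher, while v2 averages 4.5 with both responses rated 4 or higher. That kind of side-by-side comparison is what helps decide whether a prompt change actually improved output quality.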

Open Source with OpenTelemetry Standards: As an open-source project, Langtrace encourages community collaboration and contributions. Users can customize the tool to their specific needs, share improvements, and benefit from the community's collective expertise. Langtrace is also built on OpenTelemetry standards, which avoids vendor lock-in and lets developers integrate with the OpenTelemetry-compatible observability platform of their choice, such as SigNoz, Grafana, Datadog, Splunk, or Logz.io.

Integrations: As of today, Langtrace supports the following:

Frameworks: LangChain, LlamaIndex

Vector databases: Pinecone, ChromaDB

LLMs: OpenAI, Azure OpenAI, Anthropic

What's Next?

Because Langtrace is fully open source, its features will continue to evolve and improve based on community and user feedback. Upcoming features include:

Integrations with more leading LLM providers and platforms, such as Cohere, Google's Vertex AI, Amazon Bedrock, and Hugging Face

Customizable alerts

CSV download for datasets and prompts

Ability to act on LLM responses in production directly from the Langtrace console

Ability to train a hosted open-source model on your dataset, with seamless options to switch to or from other LLMs as desired

An “LLM kill switch” for human intervention, particularly when an LLM hallucinates

How Do I Get Started?

Langtrace is more than just a tool; it's a community project, and your contributions can help shape the future of LLM application observability. We encourage you to explore Langtrace, contribute to its development, and join us in our mission to make LLM applications more accessible and understandable.

Browse the Langtrace documentation to learn how to start using Langtrace with your LLM applications. Getting started takes three steps:

Create a project

Generate an API Key

Install the SDK to start shipping traces

Get Involved

Join the Langtrace community to ask questions about LLM application observability, hear about the latest product releases, connect with peers, and get your support questions answered.

Lastly, discover more about how you can contribute to or benefit from Langtrace by visiting our GitHub repository. Follow us on Twitter and join the Langtrace Discord to stay informed and get involved!
