Integrate Langtrace with Arch

Obinna Okafor, Software Engineer

Jan 17, 2025

Introduction

We're excited to announce that Langtrace now supports the Arch gateway. This means Langtrace will automatically capture traces and metrics originating from Arch.

Arch is an intelligent gateway designed to protect, observe, and personalize AI agents with your APIs. It is engineered with specialized (sub-billion-parameter) LLMs that are optimized for fast, cost-effective, and accurate handling of prompts. Arch runs alongside your application servers as a self-contained process.

Setup

Prerequisites

Before you begin installing and running Arch, ensure you have the following:

Docker System (v24)

Docker Compose (v2.29)

You'll use Arch's CLI to manage and interact with the Arch gateway. The CLI is installed with pip (the package is named archgw).

Note: We recommend creating a new Python virtual environment first to isolate dependencies, then installing the Arch CLI inside it.

Create an Arch config file named arch_config.yaml.

Start the Arch gateway with the config file by running archgw up arch_config.yaml.

Once the gateway is up, you can start interacting with it on port 12000 using OpenAI's client (Arch is compatible with the OpenAI client).
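The gateway may take a moment to start accepting connections, so a small readiness check can help before sending requests. The sketch below is only an illustration using the Python standard library: the function name wait_for_gateway, the host 127.0.0.1, and the timeout values are assumptions, and it only confirms that something is listening on the port, not that Arch is fully healthy.

```python
import socket
import time


def wait_for_gateway(host="127.0.0.1", port=12000, timeout_s=30.0, interval_s=0.5):
    """Return True once a TCP connection to host:port succeeds.

    Returns False if no connection succeeds before timeout_s elapses.
    The defaults assume the gateway listens locally on port 12000.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # A successful connect means a process is listening on the port.
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)
```

Calling wait_for_gateway() after starting the gateway blocks for up to 30 seconds and reports whether the port became reachable.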

Before we start making LLM requests, we need to do the following:

Sign up for Langtrace, create a project, and get a Langtrace API key.

Install the Langtrace SDK.

Set up the required environment variables, including your Langtrace API key.
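Before sending requests, it can be useful to confirm that the expected variables are actually set. The helper below is a minimal sketch, not part of either SDK. The variable names are assumptions: LANGTRACE_API_KEY is the name the Langtrace SDK conventionally reads, and OPENAI_API_KEY is assumed to be the provider key your Arch config references. Adjust the list to match your own setup.

```python
import os

# Assumed variable names; change these to match your configuration.
REQUIRED_VARS = ("LANGTRACE_API_KEY", "OPENAI_API_KEY")


def missing_env_vars(required=REQUIRED_VARS, environ=None):
    """Return the names in `required` that are unset or empty.

    `environ` defaults to the process environment; passing a dict
    makes the check easy to exercise in isolation.
    """
    env = os.environ if environ is None else environ
    return [name for name in required if not env.get(name)]
```

For example, missing_env_vars(environ={"LANGTRACE_API_KEY": "abc"}) returns ["OPENAI_API_KEY"], which tells you which variable still needs to be exported.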

Now we can start making requests through the gateway.
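A request through the gateway might look like the sketch below. It assumes the langtrace-python-sdk and openai packages are installed, that the gateway is listening locally on port 12000 under the /v1 path, and that LANGTRACE_API_KEY is set. The helper names, the "gpt-4o" model name, and the placeholder client key are illustrative assumptions, so substitute the values from your own arch_config.yaml.

```python
import os

# Assumption: the Arch gateway is listening locally on port 12000 and
# exposes an OpenAI-compatible API under /v1.
ARCH_BASE_URL = "http://127.0.0.1:12000/v1"


def build_chat_request(prompt, model="gpt-4o"):
    """Build keyword arguments for a chat completion call.

    The default model name is a placeholder; use one that is
    configured in your arch_config.yaml.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


def make_traced_client():
    """Initialize Langtrace, then return an OpenAI client aimed at Arch."""
    # Imports live inside the function so the helpers above can be used
    # without the SDKs installed. Langtrace is initialized before the
    # OpenAI module is imported so its instrumentation can hook in.
    from langtrace_python_sdk import langtrace

    langtrace.init(api_key=os.environ["LANGTRACE_API_KEY"])

    from openai import OpenAI

    # Assumption: the gateway supplies the provider key from its own
    # config, so the client-side key is only a placeholder.
    return OpenAI(api_key="--", base_url=ARCH_BASE_URL)


def ask(client, prompt, model="gpt-4o"):
    """Send one prompt through the gateway and return the reply text."""
    response = client.chat.completions.create(**build_chat_request(prompt, model))
    return response.choices[0].message.content
```

With the gateway running, print(ask(make_traced_client(), "Hello")) sends a single chat request through Arch. Keeping the request builder separate from the client makes it easy to check the payload without any network access.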

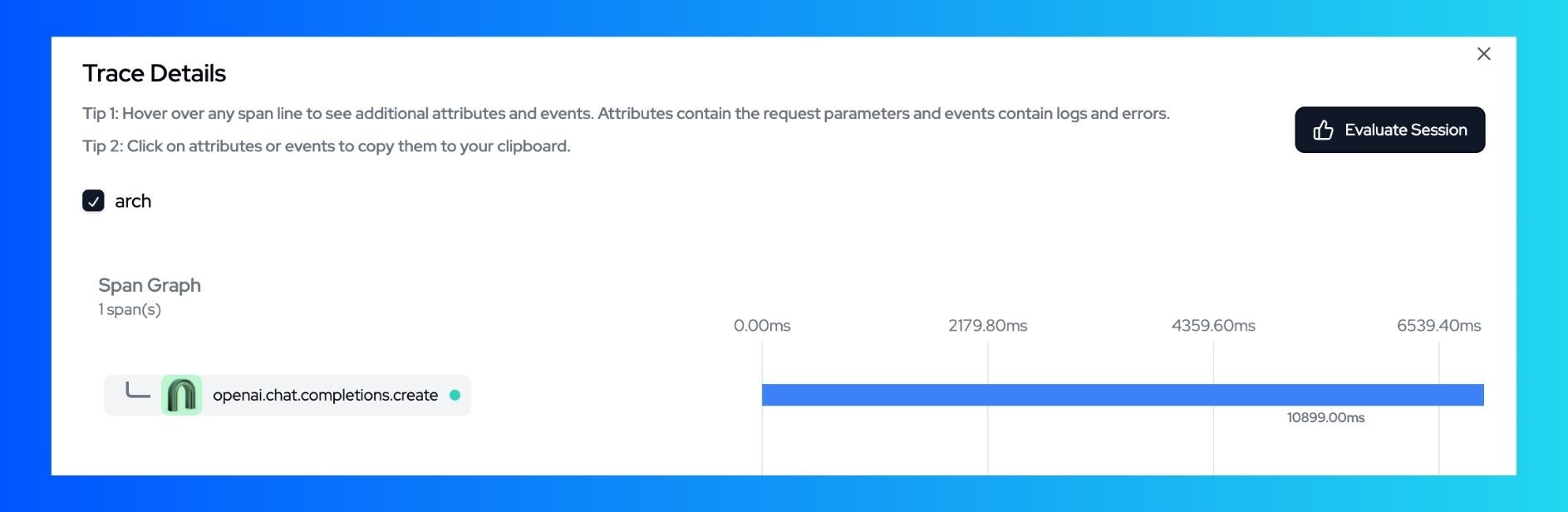

Now you can see the traces in Langtrace.
