Evaluate CrewAI Agents using Langtrace

Karthik Kalyanaraman

Cofounder and CTO

Dec 20, 2024

Introduction

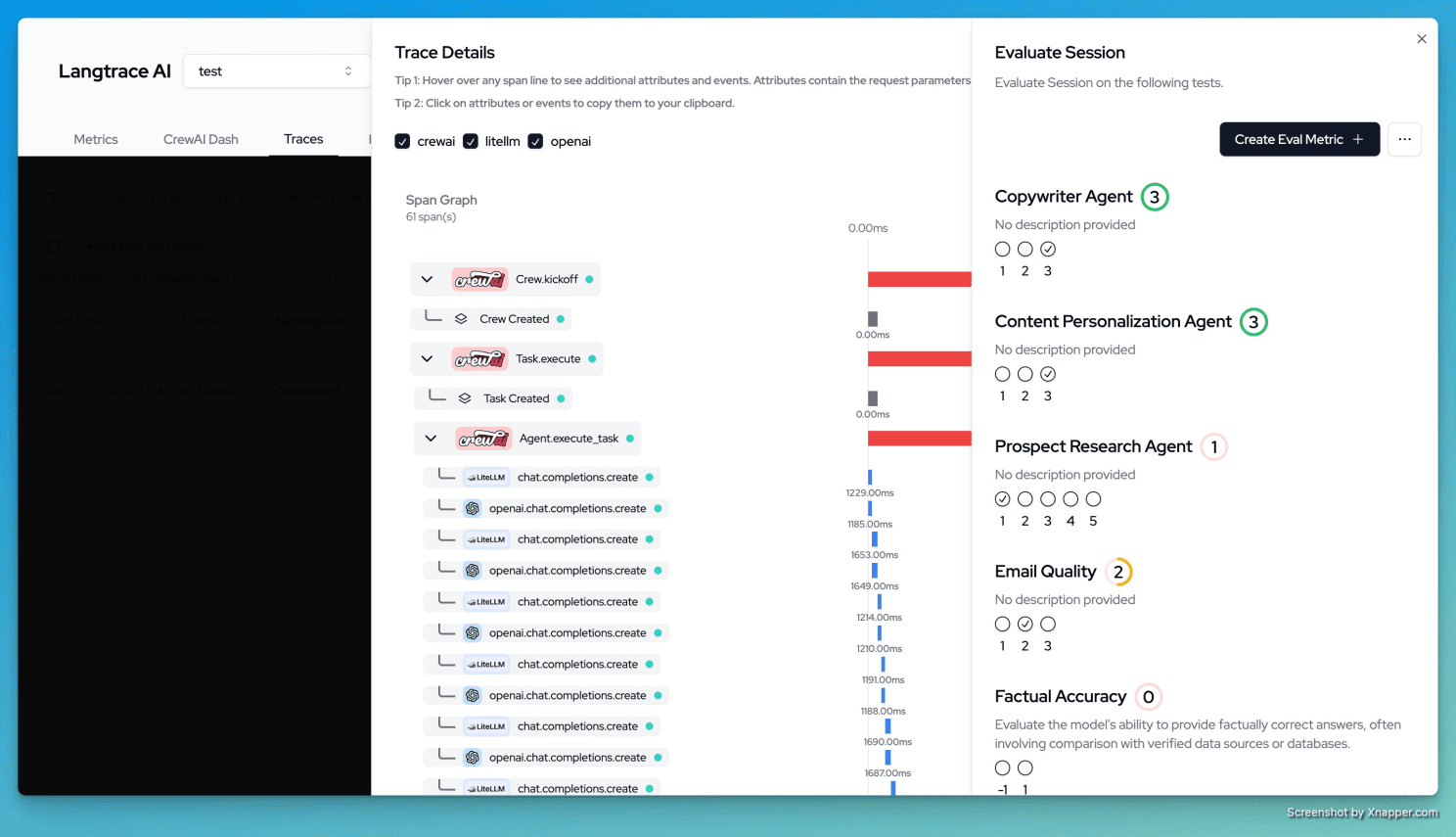

With the latest update, you can now evaluate entire agent sessions as well as individual operations across your agent stack: tool calls, VectorDB retrievals, and LLM inferences.

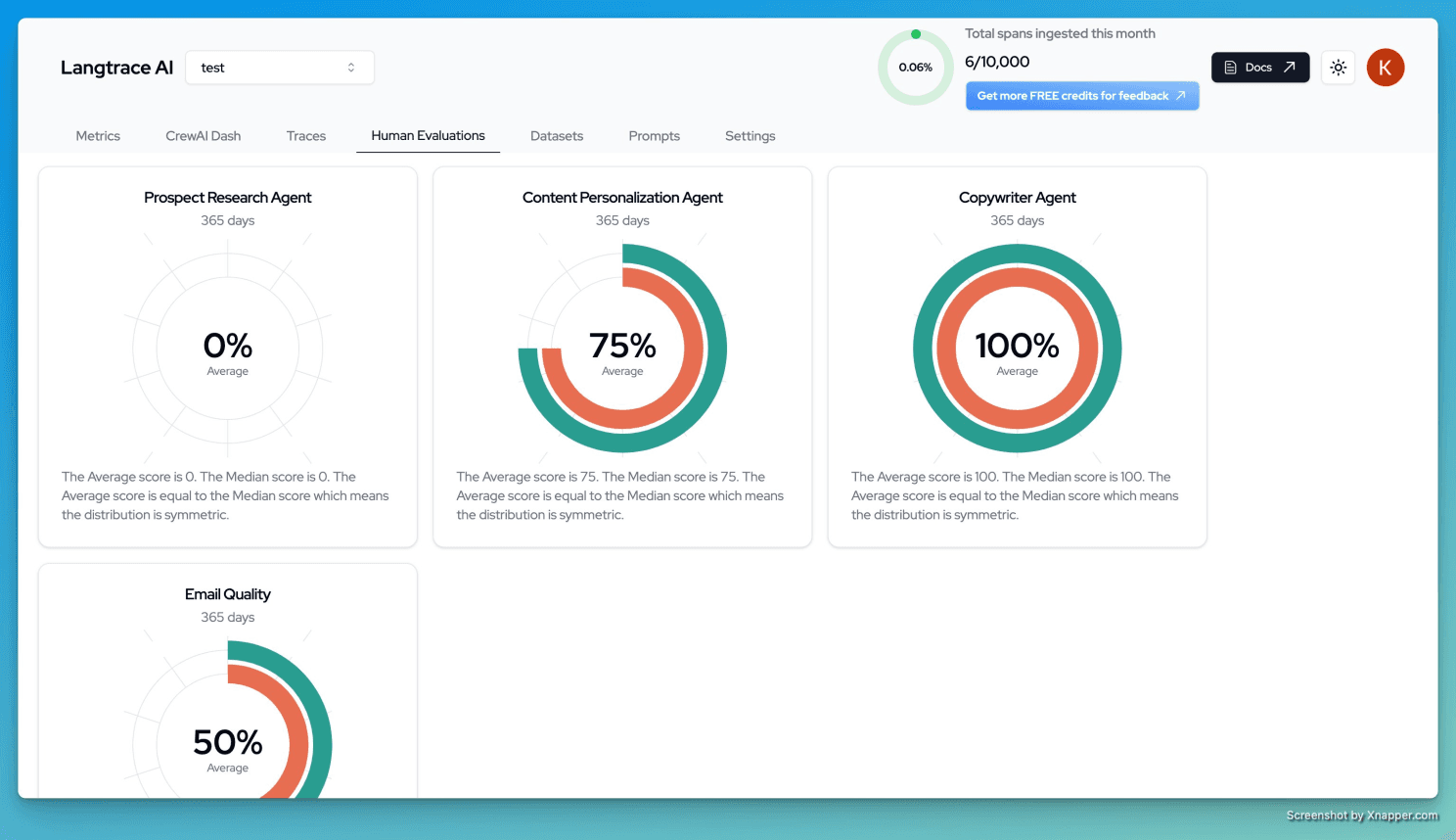

For instance, evaluating individual agents in a CrewAI-powered setup shows how each agent is performing. Aggregating median and average scores makes outlier agent runs easy to spot: when an agent's median is noticeably higher or lower than its average, a few anomalous runs are likely skewing the results.
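To show why the gap between median and average is informative, here is a minimal Python sketch that works on plain lists of scores rather than on Langtrace data. The agent names, scores, `summarize_agent_scores` helper, and 0.5 threshold are all hypothetical and chosen for illustration. This is not how Langtrace computes its aggregates.

```python
from statistics import mean, median


def summarize_agent_scores(scores_by_agent, gap_threshold=0.5):
    """Return each agent's mean, median, and a flag for a large mean/median gap.

    Hypothetical helper: gap_threshold is an arbitrary illustrative cutoff,
    not a Langtrace setting.
    """
    summary = {}
    for agent, scores in scores_by_agent.items():
        avg = mean(scores)
        mid = median(scores)
        summary[agent] = {
            "mean": round(avg, 2),
            "median": mid,
            # A large gap suggests a few runs are pulling the average away
            # from the typical (median) run.
            "flagged": abs(mid - avg) >= gap_threshold,
        }
    return summary


# Hypothetical evaluation scores (0-5) for five runs of each agent.
scores = {
    "researcher": [4, 4, 5, 4, 4],
    "writer": [4, 4, 4, 4, 0],  # one failed run
}

for agent, stats in summarize_agent_scores(scores).items():
    print(agent, stats)
```

Here the single failed `writer` run pulls its mean down to 3.2 while the median stays at 4, so only that agent is flagged. The `researcher` agent's mean (4.2) and median (4) stay close together.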

Track and optimize agent performance with precision across every aspect of your AI operations.
