Does High Traffic Affect the Accuracy of GPT-4?

Dylan Zuber

Software Engineer

Apr 30, 2024

Introduction

In the evolving landscape of artificial intelligence, the reliability and performance of large language models (LLMs) such as OpenAI's GPT-4 are under continuous scrutiny. A common question is whether these models stay as accurate during peak traffic periods. To find out, we ran an experiment with the Massive Multitask Language Understanding (MMLU) benchmark and tracked GPT-4's performance over a week. Here's what we discovered.

Methodology

Every hour for seven consecutive days, we asked GPT-4 to answer 25 MMLU questions, five from each of these five subsets:

prehistory_test

us_foreign_policy_test

high_school_geography_test

moral_scenarios_test

college_physics_test
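
The post doesn't say how the 25 questions were selected or formatted, or whether fresh questions were drawn each hour. As a minimal sketch, assuming the headerless CSV layout of the original MMLU release (question, four choices, answer letter) and a fixed random seed so that every hourly run reuses the same batch, the selection and prompt rendering could look like this. The helper names (`parse_mmlu_csv`, `build_batch`, `format_prompt`) and the prompt wording are hypothetical.

```python
import csv
import io
import random

# Subset names as listed in the post; the "_test" suffix matches the
# per-subject test files of the original MMLU release.
SUBSETS = [
    "prehistory_test",
    "us_foreign_policy_test",
    "high_school_geography_test",
    "moral_scenarios_test",
    "college_physics_test",
]
QUESTIONS_PER_SUBSET = 5  # 5 questions x 5 subsets = 25 per hourly run


def parse_mmlu_csv(text):
    """Parse a headerless MMLU CSV whose columns are: question, A, B, C, D, answer."""
    rows = []
    for question, a, b, c, d, answer in csv.reader(io.StringIO(text)):
        rows.append({"question": question, "choices": [a, b, c, d], "answer": answer.strip()})
    return rows


def build_batch(rows_by_subset, k=QUESTIONS_PER_SUBSET, seed=0):
    """Pick k questions per subset; a fixed seed means every run gets the same batch."""
    rng = random.Random(seed)
    batch = []
    for subset in SUBSETS:
        for row in rng.sample(rows_by_subset[subset], k):
            batch.append({"subset": subset, **row})
    return batch


def format_prompt(item):
    """Render one multiple-choice item (asking for a single letter is an assumption)."""
    lines = [item["question"]]
    for letter, choice in zip("ABCD", item["choices"]):
        lines.append(f"{letter}. {choice}")
    lines.append("Answer with a single letter (A, B, C, or D).")
    return "\n".join(lines)
```

Fixing the seed keeps the hourly runs comparable: any change in accuracy then reflects the model's answers rather than a different mix of questions.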

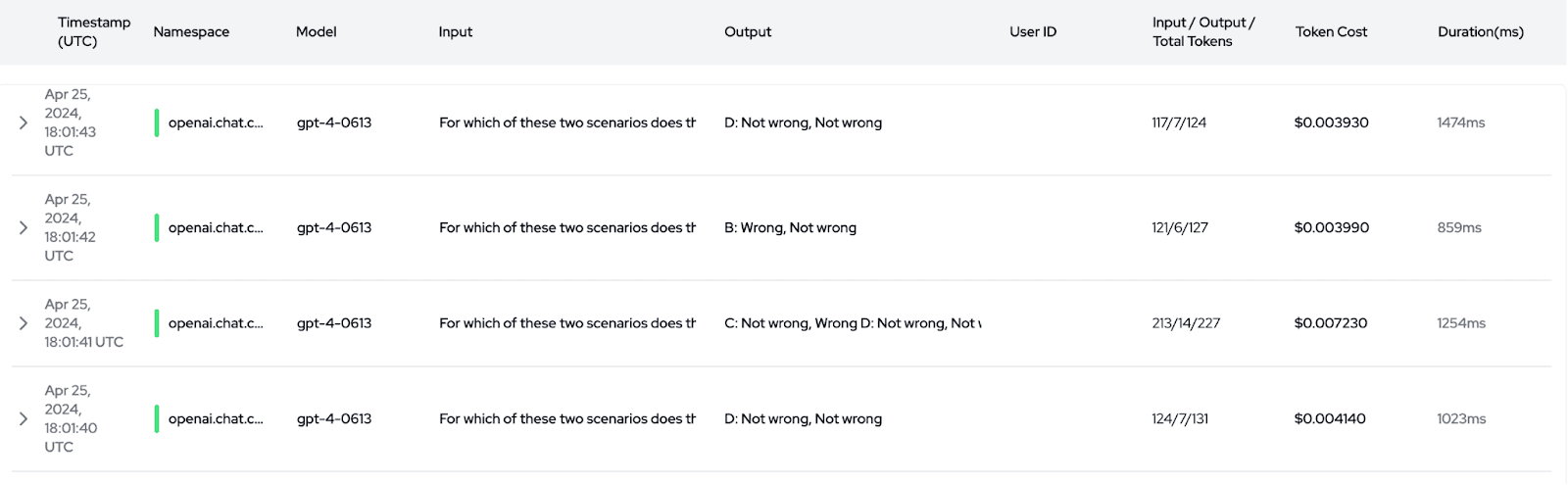

Because of this schedule, the model was tested at every hour of the day, including what we presumed to be peak traffic periods. To collect the data, we used Langtrace, an OpenTelemetry-based observability tool designed for LLM applications. Langtrace recorded every request and response throughout the data collection phase, which let us measure the model's accuracy at each hour of the day.
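
Langtrace captures the raw request and response for each call, but this post doesn't describe how the responses were graded. A minimal sketch of one plausible approach, assuming the model is asked to reply with a single letter, extracts that letter with simple regular expressions and compares it with the dataset's answer key. It does not use Langtrace's API, and `extract_letter`, `score`, and `ScoredResponse` are hypothetical names.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# A reply that is just a letter, e.g. "B", "(B)" or "B."
_BARE = re.compile(r"^\W*([ABCD])\W*$")
# A letter stated after the word "answer", e.g. "Answer: B" or "the answer is (C)".
_STATED = re.compile(r"(?i:answer)\s*(?:is)?\s*:?\s*\(?([ABCD])\b")


@dataclass
class ScoredResponse:
    timestamp: datetime       # when the request was sent, normalized to UTC
    subset: str               # e.g. "college_physics_test"
    expected: str             # gold answer letter from the dataset
    predicted: Optional[str]  # letter extracted from the reply, or None
    correct: bool


def extract_letter(reply: str) -> Optional[str]:
    """Return the answer letter stated in a reply, or None if none is found."""
    text = reply.strip()
    match = _BARE.match(text) or _STATED.search(text)
    return match.group(1) if match else None


def score(timestamp: datetime, subset: str, expected: str, reply: str) -> ScoredResponse:
    """Grade one captured response; the timestamp must be timezone-aware."""
    predicted = extract_letter(reply)
    return ScoredResponse(
        timestamp=timestamp.astimezone(timezone.utc),
        subset=subset,
        expected=expected,
        predicted=predicted,
        correct=predicted == expected,
    )
```

A grader like this is deliberately strict: a reply that never states a letter counts as incorrect instead of being guessed at.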

Results

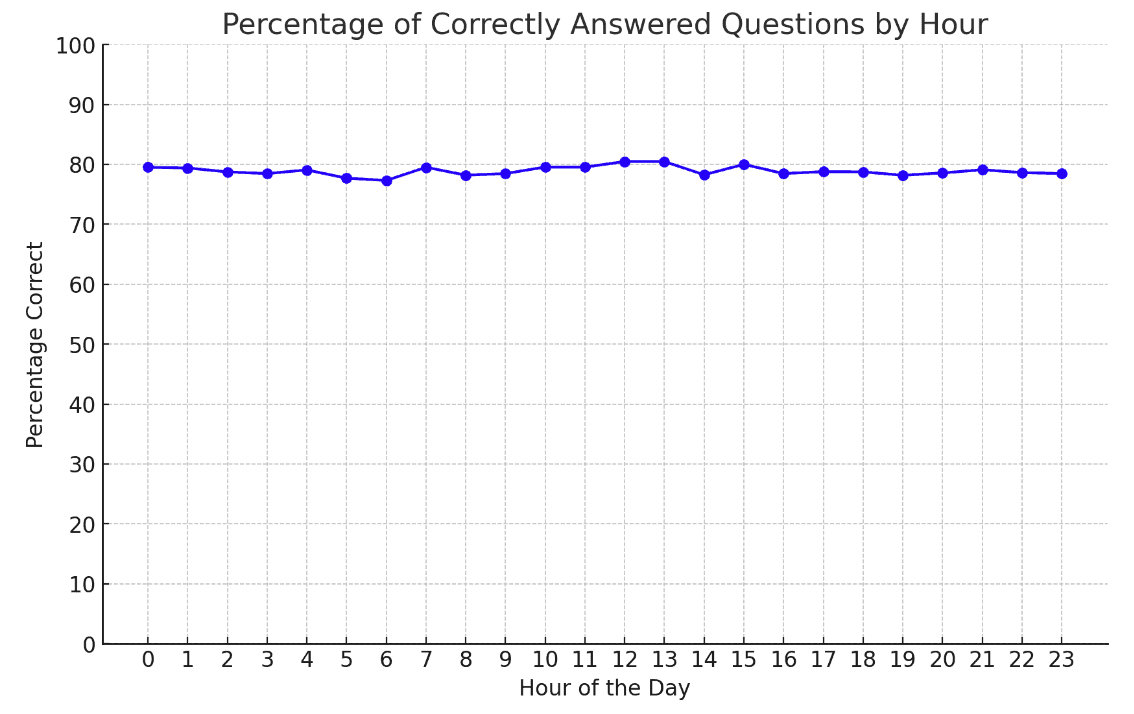

Here's a snapshot of the model's hourly performance throughout the day, represented in percentages of correct responses (times are in UTC):

Midnight to 6 AM: Accuracy stayed between roughly 77% and 80%, from a low of 77.31% at 6 AM to a high of 79.53% at midnight.

7 AM to Noon: Accuracy rose slightly around 9 AM, and the period's peak came at noon at 80.47%, a value matched again at 3 PM.

Afternoon: After noon, accuracy dipped slightly and then recovered, peaking again at 80.47% at 3 PM.

Evening to Late Night: Accuracy fluctuated less in the evening hours, holding steady with only minor dips.
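
Each hourly figure above comes from bucketing graded responses by their UTC hour and pooling them across the seven days. A minimal sketch, assuming each record is a `(timestamp, correct)` pair with a timezone-aware timestamp (`hourly_accuracy` is a hypothetical helper, and the example records are made up, not the study's data):

```python
from collections import defaultdict
from datetime import datetime, timezone


def hourly_accuracy(records):
    """Percent correct per UTC hour of day, pooled across all days.

    `records` is an iterable of (timestamp, correct) pairs; timestamps must be
    timezone-aware so they can be converted to UTC.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for ts, correct in records:
        hour = ts.astimezone(timezone.utc).hour  # bucket by UTC hour, 0-23
        totals[hour] += 1
        hits[hour] += int(correct)
    # Sorted so the hours come out in clock order.
    return {hour: 100.0 * hits[hour] / totals[hour] for hour in sorted(totals)}


# Made-up records for illustration only.
example = [
    (datetime(2024, 4, 20, 6, 0, tzinfo=timezone.utc), True),
    (datetime(2024, 4, 21, 6, 0, tzinfo=timezone.utc), False),
    (datetime.fromisoformat("2024-04-22T08:00:00+02:00"), True),  # 06:00 UTC
    (datetime(2024, 4, 20, 12, 0, tzinfo=timezone.utc), True),
]
print(hourly_accuracy(example))  # {6: 66.66666666666667, 12: 100.0}
```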

TL;DR: Accuracy varied by only about 3 percentage points over the day, from a low of 77.31% at 6 AM to a high of 80.47% at noon and 3 PM. Notably, there were no marked dips during the hours we expected to be busiest, suggesting that traffic load does not substantially affect GPT-4's accuracy.
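
The spread between the hourly extremes quoted in the Results list can be checked directly, and a binomial standard error gives a rough sense of how much hour-to-hour variation sampling noise alone would produce. This sketch assumes each hourly figure pools 25 questions on each of 7 days (175 answers) and treats every answer as an independent trial, which is a simplification, especially if the same questions are reused every hour. `spread_pp` and `binomial_se_pp` are hypothetical helpers.

```python
import math


def spread_pp(hourly):
    """Largest minus smallest hourly accuracy, in percentage points."""
    return max(hourly.values()) - min(hourly.values())


def binomial_se_pp(accuracy_percent, n):
    """Standard error of an accuracy measured on n independent questions, in percentage points."""
    p = accuracy_percent / 100.0
    return 100.0 * math.sqrt(p * (1.0 - p) / n)


# Hourly figures quoted in the Results section, keyed by UTC hour.
quoted = {0: 79.53, 6: 77.31, 12: 80.47, 15: 80.47}

# Assumption: each hourly figure pools 25 questions on each of 7 days.
n_per_hour = 25 * 7

print(f"spread: {spread_pp(quoted):.2f} pp")                       # spread: 3.16 pp
print(f"one SE at 79%: {binomial_se_pp(79.0, n_per_hour):.2f} pp")  # one SE at 79%: 3.08 pp
```

Under these assumptions, one standard error is about 3 percentage points, so an hour-to-hour spread of roughly that size is what chance variation alone would be expected to produce.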

Analysis

These findings are interesting because they challenge the assumption that LLMs struggle under heavy user load. The infrastructure behind GPT-4 appears robust enough to absorb fluctuations in demand without degrading answer quality. That matters for businesses that rely on LLMs for customer service, content creation, and other real-time applications.

Conclusion

The experiment paints a consistent picture: GPT-4's accuracy remained stable throughout the day, including during presumed peak traffic periods. For companies building on GPT-4, this suggests you can expect consistent accuracy regardless of the time of day. This kind of reliability builds trust in AI technologies and encourages wider adoption across sectors.

Our study, supported by Langtrace's ability to capture and analyze every request with high fidelity, adds data to the ongoing discussion about GPT-4's scalability and reliability, and lays the groundwork for further research into AI performance under different operating conditions. In the future, we plan to run similar studies on other popular LLMs.

We'd love to hear your feedback on our GPT-4 reliability analysis! We invite you to join our community on Discord or reach out at support@langtrace.ai and share your experiences, insights, and suggestions. Together, we can continue to set new standards of observability in LLM development.